Older people who do not take part in social and mentally stimulating activities show faster cognitive decline. Unfortunately, limited staffing and heavy workloads in nursing homes tend to restrict the time caretakers have for socially stimulating tasks. To assist in these efforts, we have worked on the AKTIV project, in which a virtual persona addresses the residents of a home for the elderly by name and encourages them to play simple games and engage in conversations with fellow residents.

Artificial Intelligence for Medicine and Health

We develop novel methods for medical computer vision applications such as medical image analysis tools, multi-modal medical models and interactive medical models, where we put emphasis on sustainability through data-efficient training.

We work on projects with industry partners (e.g., previously Zeiss), in the context of graduate school programs (e.g., HIDSS4HEALTH) and in other research contexts (e.g., KiKIT).

We are part of KITHealthTech where we ask the question: How can we digitalize technology and processes in healthcare?

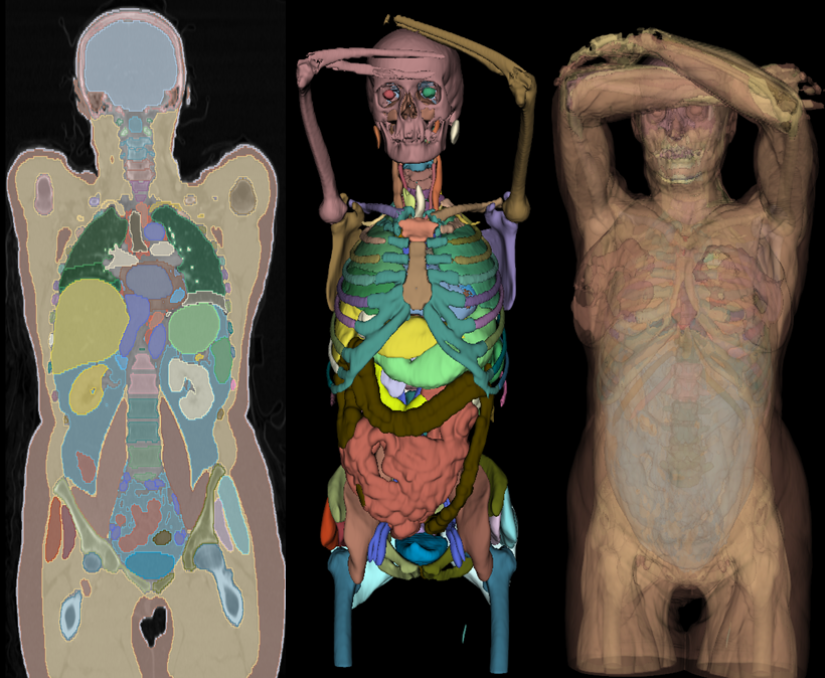

Computer-aided Diagnostic Systems Grounded in Medical Knowledge

We published multiple medical datasets enriched with high-quality, automatically obtained human anatomy labels for X-ray images (BMVC, dataset) and CT scans (ICIP, dataset). Building on this auxiliary information about anatomical structures, we develop methods that exploit it to improve performance on disease segmentation (MICCAI). Our research also advances the evaluation of medical segmentation models. We propose CC-Metrics, an adaptation of commonly used metrics that better reflects a model's ability to discover individual instances rather than only large segments, which is important in contexts such as tumor segmentation (AAAI).
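To illustrate why evaluating per instance matters, here is a minimal sketch in plain Python. It is not the published CC-Metrics implementation: the function names (`dice`, `connected_components`, `per_component_dice`) are hypothetical, and the sketch assumes that every pixel is assigned to its nearest ground-truth component before a standard Dice score is computed inside each resulting region.

```python
from collections import deque


def dice(pred, gt):
    """Dice score between two sets of (row, col) pixel coordinates."""
    if not pred and not gt:
        return 1.0
    return 2 * len(pred & gt) / (len(pred) + len(gt))


def connected_components(mask):
    """Split a set of foreground pixels into 4-connected components."""
    remaining, components = set(mask), []
    while remaining:
        seed = remaining.pop()
        component, queue = {seed}, deque([seed])
        while queue:
            r, c = queue.popleft()
            for n in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if n in remaining:
                    remaining.remove(n)
                    component.add(n)
                    queue.append(n)
        components.append(component)
    return components


def per_component_dice(pred, gt, shape):
    """Average Dice over regions, one region per ground-truth component.

    Assumption of this sketch: each image pixel belongs to the region of its
    nearest ground-truth component (squared Euclidean distance, brute force).
    """
    components = connected_components(gt)
    if not components:
        return dice(pred, gt)
    regions = [set() for _ in components]
    for r in range(shape[0]):
        for c in range(shape[1]):
            dists = [min((r - pr) ** 2 + (c - pc) ** 2 for pr, pc in comp)
                     for comp in components]
            regions[dists.index(min(dists))].add((r, c))
    scores = [dice(pred & region, comp)
              for region, comp in zip(regions, components)]
    return sum(scores) / len(scores)


# Toy case: one large lesion (25 pixels) and one tiny lesion (1 pixel).
shape = (6, 12)
large = {(r, c) for r in range(5) for c in range(5)}
small = {(2, 10)}
gt = large | small
pred = set(large)  # the model finds the large lesion and misses the small one

global_score = dice(pred, gt)
component_score = per_component_dice(pred, gt, shape)
print(f"global Dice: {global_score:.3f}, per-component Dice: {component_score:.3f}")
```

In this toy case the global Dice stays close to 1 because the large lesion dominates the pixel count, while the per-component average drops to 0.5 because one of the two lesions was missed entirely.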

Medical Practitioners in the loop: Harnessing Interactivity between Doctors and AI

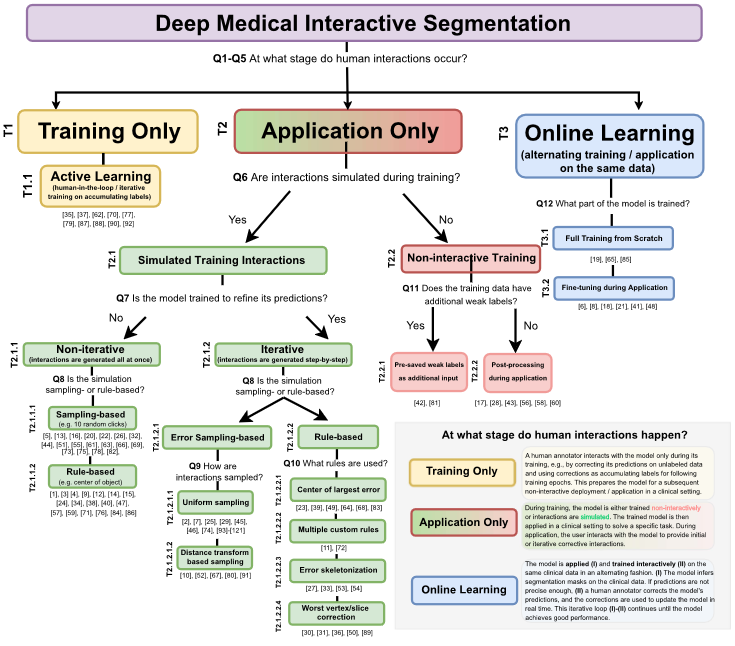

AI systems can be used to process medical data automatically and derive insights directly from it, or they can be built as interactive models in which a medical doctor collaborates directly with the system. Such an interactive design can speed up the transfer of medical knowledge from an expert to the system, which in turn helps to train better medical image analysis models.

In our work on interactive models, we analyzed the literature in a systematic review and derived a taxonomy for deep interactive medical segmentation models (TPAMI). Further, we explored how best to integrate cues given by medical doctors into interactive deep learning models (MICCAI) and investigated techniques to make interactive models faster (ISBI). We regularly participate in (Nature Machine Intelligence) and organize interactive medical segmentation challenges.
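As an illustration of what integrating a doctor's cues can look like, the sketch below rasterizes positive and negative clicks into binary disk maps and stacks them with the image as extra input channels. This is one common encoding, shown under our own assumptions and not necessarily the one used in the cited work; `encode_clicks`, `build_model_input`, and the `radius` parameter are hypothetical names chosen for this example.

```python
def encode_clicks(clicks, shape, radius=2):
    """Rasterize (row, col) clicks into a binary disk map of size shape."""
    rows, cols = shape
    guidance = [[0.0] * cols for _ in range(rows)]
    for cr, cc in clicks:
        # Only visit the bounding box of the disk, clipped to the image.
        for r in range(max(0, cr - radius), min(rows, cr + radius + 1)):
            for c in range(max(0, cc - radius), min(cols, cc + radius + 1)):
                if (r - cr) ** 2 + (c - cc) ** 2 <= radius ** 2:
                    guidance[r][c] = 1.0
    return guidance


def build_model_input(image, positive_clicks, negative_clicks, radius=2):
    """Stack the image with one channel per click type.

    Returns a list of three 2D channels: image, positive map, negative map.
    """
    shape = (len(image), len(image[0]))
    return [
        image,
        encode_clicks(positive_clicks, shape, radius),
        encode_clicks(negative_clicks, shape, radius),
    ]


# Toy 8x8 image with one "this is lesion" click and one "this is background" click.
image = [[0.0] * 8 for _ in range(8)]
channels = build_model_input(image, [(3, 3)], [(0, 7)], radius=1)
positive_pixels = sum(map(sum, channels[1]))
negative_pixels = sum(map(sum, channels[2]))
print(len(channels), positive_pixels, negative_pixels)
```

A segmentation network would then take the three channels as input, so that each new click changes the input and lets the model refine its prediction.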

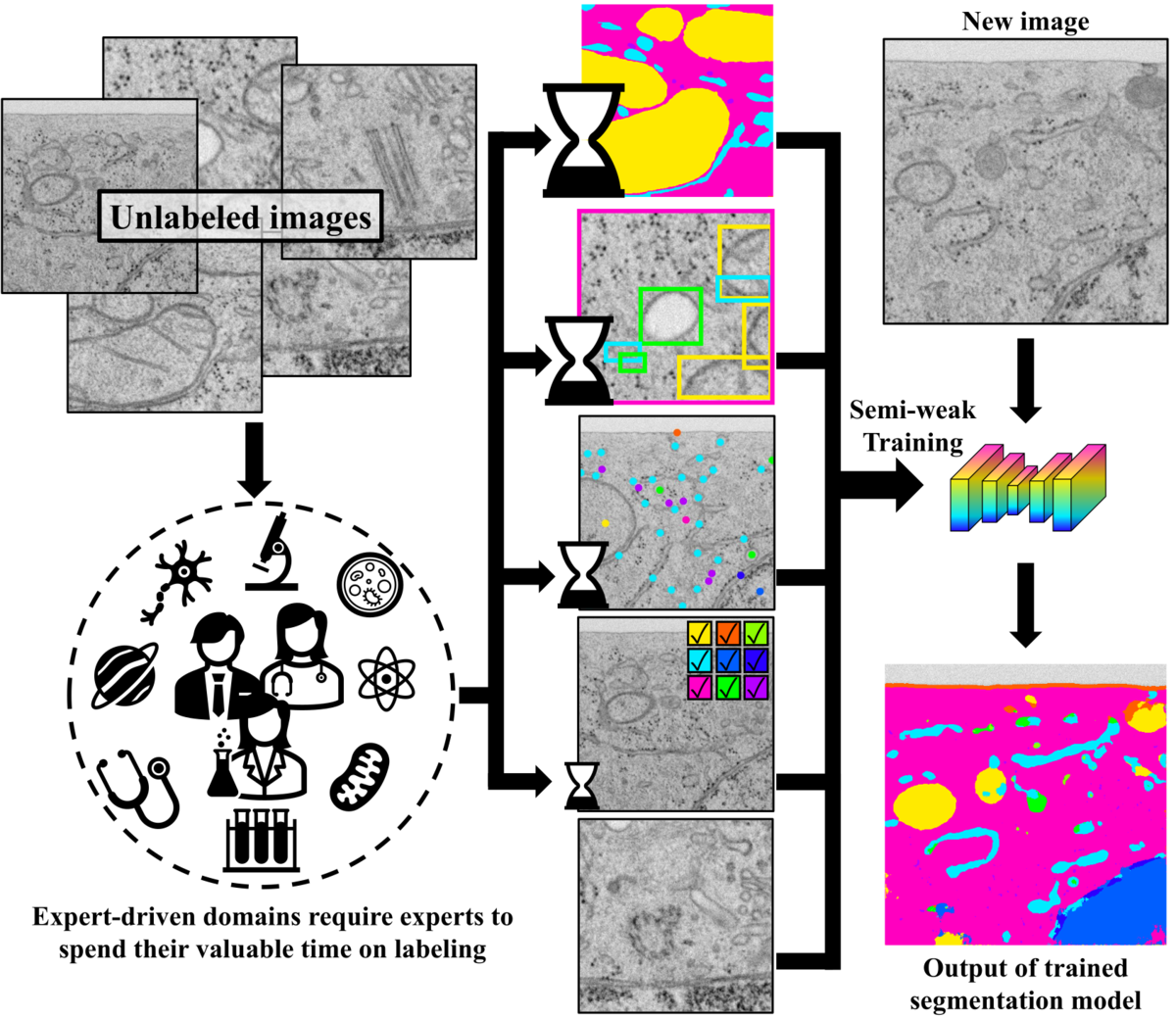

Learning with less: Medical AI in Scarce Data Scenarios

A central problem in artificial intelligence systems is the requirement to train them on large datasets with expensive annotations. In the medical domain, these annotations must be created by doctors, as they have the expertise to interpret, e.g., medical images. Solutions are therefore needed that make it possible to train models with only very few annotations and that keep the training process flexible enough to best accommodate the expert’s time.

We propose strategies for data-efficient training (CVPR, AAAI) that, with only a handful of annotations, allow us to train semantic segmentation models with only a minor performance loss compared to models trained with hundreds of annotations. Further, we research training techniques that add flexibility to the annotation process by accepting highly heterogeneous training signals for medical segmentation models (CVPR, ECCV). We also investigate the adaptation of pre-trained models to new data distributions without the need to collect expensive pixel-wise annotations (ISBI).

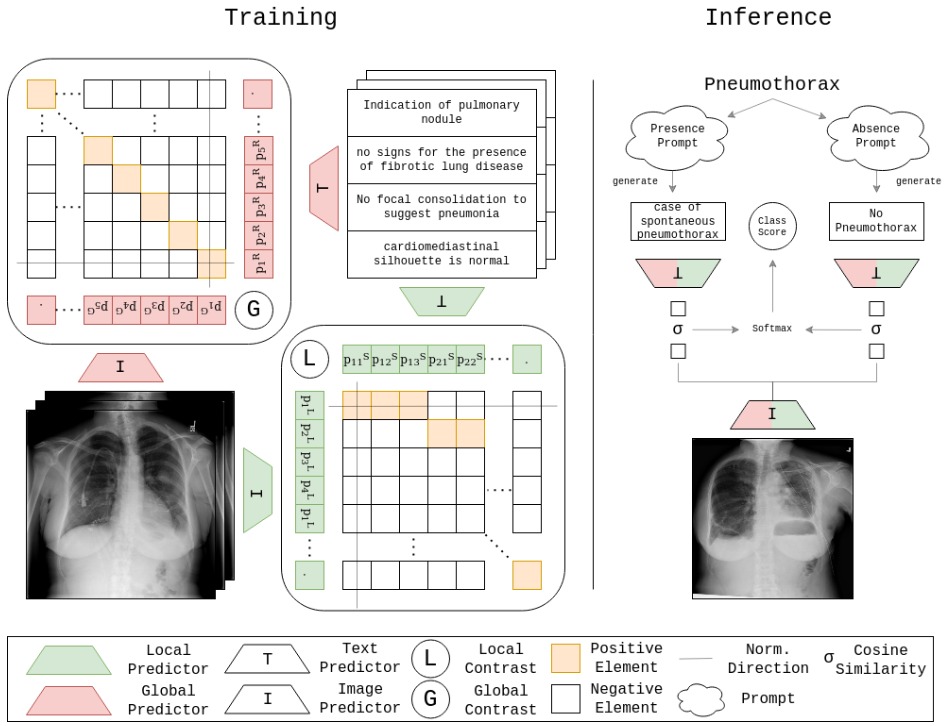

Multi-modal AI in Medicine: From OCT, X-ray and CT to Natural Language

Data in the medical domain is highly multi-modal. A wide range of imaging modalities is used to gather insights into a patient’s health, including optical coherence tomography, computed tomography, magnetic resonance imaging and X-ray scans. In addition, textual data in the form of reports accumulates in day-to-day clinical routine.

In our research, we bring together different imaging modalities to benefit from the complementary information they offer and thereby train better deep learning models (ICCV-W). We also showed that medical images and radiological reports can be used to train classification models without additional explicit labels while still enabling open-set recognition (MICCAI).

Sleep Monitoring

Within the VIPSAFE and SPHERE projects, we have worked on several sleep monitoring tasks:

- Breath Analysis

- Sleep Position

- Agitation Quantification

- Action Recognition

We aim to provide better and safer care in intensive care rooms and to improve sleep quality for the elderly in nursing homes and ageing-at-home setups.

Publications List

- Interactive Segmentation and Annotation of Medical Images. PhD dissertation
  Marinov, Z.
  2026, February 25. Karlsruher Institut für Technologie (KIT). doi:10.5445/IR/1000190952

- Leveraging Anatomical Knowledge Across the Model Development Lifecycle for Medical Image Segmentation. PhD dissertation
  Jaus, A.
  2025, November 26. Karlsruher Institut für Technologie (KIT). doi:10.5445/IR/1000186998

- MICA: Multi-Agent Industrial Coordination Assistant
  Wen, D.; Peng, K.; Zheng, J.; Chen, Y.; Shi, Y.; Wei, J.; Liu, R.; Yang, K.; Stiefelhagen, R.
  2025. arXiv. doi:10.48550/arXiv.2509.15237

- LIMIS: Towards Language-Based Interactive Medical Image Segmentation
  Heinemann, L.; Jaus, A.; Marinov, Z.; Kim, M.; Spadea, M. F.; Kleesiek, J.; Stiefelhagen, R.
  2025. 2025 IEEE 22nd International Symposium on Biomedical Imaging (ISBI), 1–5, Institute of Electrical and Electronics Engineers (IEEE). doi:10.1109/ISBI60581.2025.10981190

- Every Component Counts: Rethinking the Measure of Success for Medical Semantic Segmentation in Multi-Instance Segmentation Tasks
  Jaus, A.; Seibold, C. M.; Reiß, S.; Marinov, Z.; Li, K.; Ye, Z.; Krieg, S.; Kleesiek, J.; Stiefelhagen, R.
  2025. Proceedings of the AAAI Conference on Artificial Intelligence, 3904–3912, Association for the Advancement of Artificial Intelligence (AAAI). doi:10.1609/aaai.v39i4.32408

- MedShapeNet – a large-scale dataset of 3D medical shapes for computer vision
  Li, J.; Zhou, Z.; Yang, J.; Pepe, A.; Gsaxner, C.; Luijten, G.; Qu, C.; Zhang, T.; Chen, X.; Marinov, Z.; et al.
  2025. Biomedical Engineering / Biomedizinische Technik, 70 (1), 71–90. doi:10.1515/bmt-2024-0396

- Conquering the Retina: Bringing Visual in-Context Learning to OCT
  Negrini, A.; Reiß, S.
  2025. arXiv. doi:10.48550/arXiv.2506.15200

- Semantic Segmentation for Preoperative Planning in Transcatheter Aortic Valve Replacement
  Zöllner, C.; Reiß, S.; Jaus, A.; Sholi, A.; Sodian, R.; Stiefelhagen, R.
  2025. arXiv. doi:10.48550/arXiv.2507.16573

- Is Visual in-Context Learning for Compositional Medical Tasks within Reach?
  Reiß, S.; Marinov, Z.; Jaus, A.; Seibold, C.; Sarfraz, M. S.; Rodner, E.; Stiefelhagen, R.
  2025. arXiv. doi:10.5445/IR/1000187365

- Taking a Step Back: Revisiting Classical Approaches for Efficient Interactive Segmentation of Medical Images
  Marinov, Z.; Jaus, A.; Kleesiek, J.; Stiefelhagen, R.
  2025. Medical Image Segmentation Foundation Models. CVPR 2024 Challenge: Segment Anything in Medical Images on Laptop – MedSAM on Laptop 2024, Held in Conjunction with CVPR 2024, Seattle, WA, USA, June 17–21, 2024, Proceedings. Ed.: J. Ma, 101–125, Springer Nature Switzerland. doi:10.1007/978-3-031-81854-7_7

- OneBEV: Using One Panoramic Image for Bird’s-Eye-View Semantic Mapping
  Wei, J.; Zheng, J.; Liu, R.; Hu, J.; Zhang, J.; Stiefelhagen, R.
  2025. Computer Vision – ACCV 2024 : 17th Asian Conference on Computer Vision, Hanoi, Vietnam, December 8–12, 2024, Proceedings, Part X. Ed.: M. Cho, 377–393, Springer Nature Singapore. doi:10.1007/978-981-96-0972-7_22

- Behind Every Domain There is a Shift: Adapting Distortion-aware Vision Transformers for Panoramic Semantic Segmentation
  Zhang, J.; Yang, K.; Shi, H.; Reiß, S.; Peng, K.; Ma, C.; Fu, H.; Torr, P. H. S.; Wang, K.; Stiefelhagen, R.
  2024. IEEE Transactions on Pattern Analysis and Machine Intelligence, 46 (12), 8549–8567. doi:10.1109/TPAMI.2024.3408642

- Style Transfer and Pseudo-Label Filtering Improve Transferability in Cell Organelle Segmentation Scenarios
  Seletkov, D.; Reiß, S.; Freytag, A.; Seibold, C.; Stiefelhagen, R.
  2024. 2024 IEEE International Symposium on Biomedical Imaging (ISBI), Institute of Electrical and Electronics Engineers (IEEE). doi:10.1109/ISBI56570.2024.10635796

- LIMIS: Towards Language-Based Interactive Medical Image Segmentation
  Heinemann, L.; Jaus, A.; Marinov, Z.; Kim, M.; Spadea, M. F.; Kleesiek, J.; Stiefelhagen, R.
  2024. doi:10.48550/arXiv.2410.16939

- Every Component Counts: Rethinking the Measure of Success for Medical Semantic Segmentation in Multi-Instance Segmentation Tasks
  Jaus, A.; Seibold, C. M.; Reiß, S.; Marinov, Z.; Li, K.; Ye, Z.; Krieg, S.; Kleesiek, J.; Stiefelhagen, R.
  2024. doi:10.48550/arXiv.2410.18684
